Imagine this: It’s been a long day, and you’re about to sign off and head home. You get a video call from your boss. He asks you to wire $25 million to another account; it’s urgent. It's a lot of money, but it's not unusual for your company. You have no reason to doubt that you are talking to your boss, the CEO.

As a financial officer, you’re authorized to transfer funds and have access to the company’s accounts; you send the money.

Except it wasn’t your boss.

It was an interactive deepfake video call.

The caller was an AI-generated (artificial intelligence) version of your boss, created by criminals, and $25 million has now vanished from the company’s finances into untraceable accounts.

This isn’t an imaginary scenario. It really happened: “Hong Kong police have launched an investigation after an employee at an unnamed company claimed she was duped into paying HK$200m (£20m) of her firm’s money to fraudsters in a deepfake video conference call.”

Could you tell a deepfake video, image, or email from a real one? It’s getting harder and harder, which is why deepfake prevention is so important.

Since deepfakes are real and present, could your KYC software tell a real human from a few seconds of AI-generated deepfake video?

Organizations need robust deepfake detection technology to protect themselves against fraudulent and criminal behavior today, not tomorrow or next quarter.

In this article, we explain why you need deepfake prevention, how deepfakes work, and the risks associated with deepfakes for KYC (Know Your Customer) and AML (Anti-Money Laundering).

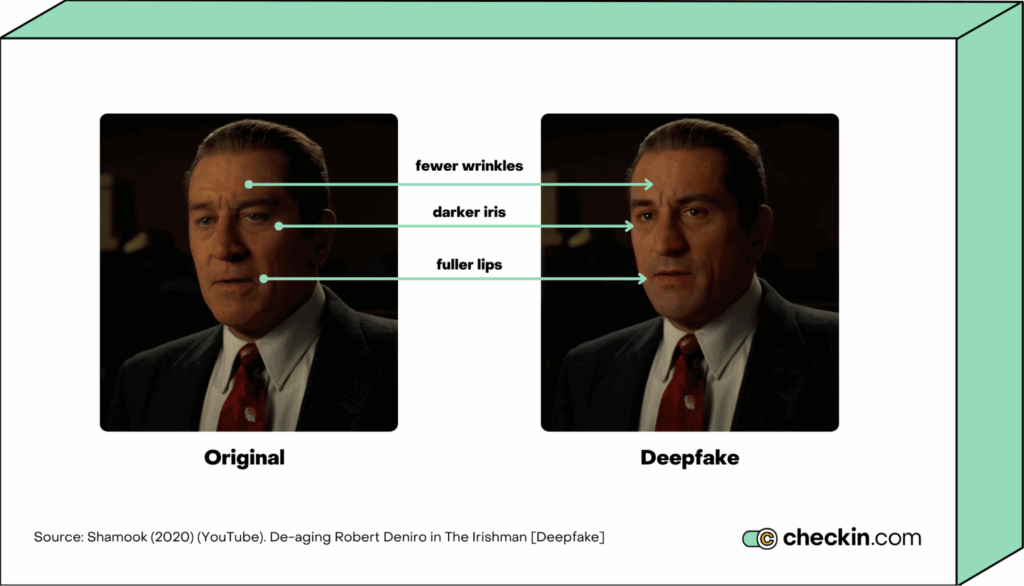

What are deepfakes?

Deepfakes are artificially generated pieces of content designed to weaponize disinformation, spread lies, and cause harm and abuse. Naturally, cybercriminals have also started using deepfakes to scam and defraud people and companies.

As AI models get more sophisticated, deepfakes (videos, images, audio, synthetic documents like IDs, and emails) are getting harder to detect without dedicated technology to verify whether a piece of content is a deepfake.

Deepfake prevention is also likely to get harder now that Meta-owned social media platforms, particularly Facebook and Instagram, have ended their third-party fact-checking program in the U.S.

AI models, including Generative Adversarial Networks (GANs), are becoming very good at producing fake documents, and even realistic videos and audio recordings that appear to show real people. This makes the risk of deepfakes hard to ignore. Deepfakes are a clear and present danger, something the FBI and U.S. Department of Homeland Security (DHS) noted a few years ago.

Deepfakes played a role in elections in the U.S. and elsewhere in the world in 2024.

In New Hampshire, a deepfake audio message of “what sounded like President Biden telling Democrats not to vote in the state's primary, just days away” was released, shockingly by “a Democratic political consultant who said he did it to raise alarms about AI. He was fined $6 million by the FCC and indicted on criminal charges in New Hampshire,” according to NPR.

We are up against deepfakes that can cost organizations millions of dollars in fraud.

We are up against deepfakes that can trick people into believing outright and outrageous lies.

Deepfakes are everywhere. Weaponized misinformation, social engineering, and fraud using deepfakes will only affect more people and create more opportunities for criminal gangs.

Businesses, the media, and governments need to take more steps to actively stop and prevent more damage being done by deepfakes.

If your organization doesn’t have deepfake prevention in place, you need it sooner rather than later.

How deepfakes work in KYC and AML

AI-generated IDs (driver's licenses, passports, etc.) and fake personal details are being used to create accounts and give fraudsters, AI bots, and criminals access to banks, travel websites, airlines, and gaming platforms. These deepfake IDs can bypass regular KYC systems.

Any service that requires an account can now potentially be accessed using deepfakes.

Tessa Bosschem, a Cyber and Information Security Specialist at Barclays Private Bank, says: “The sophistication of deepfakes is growing, and with it, the threat to individuals everywhere. These fake videos and audio clips can manipulate reality, but many people are still unaware of the danger. And as AI technology only gets better, we can expect to see even more realistic and convincing deepfakes in the future.”

Think about what customers need to gain access to your company’s services:

- An ID document

- Email address

- Password

- An address

- Contact phone number

- Possibly biometrics, such as a facial scan, or the ability to do a Liveness check.

Now, if AI can create all of the above, including a facial scan or short video of a real customer, then potentially a deepfake can be used to gain access to your services.

For as long as KYC and AML have existed, criminal gangs have been trying to circumvent them and gain access to everything from bank accounts to corporate email accounts, especially when money is involved or there is something worth stealing.

With deepfakes and widespread access to the software that makes them possible, criminal gangs, cybercriminals, and hostile nations have the tools to break through KYC and AML safeguards.

This is the danger organizations everywhere are facing.

What cybercriminals do when they gain access depends on the purpose behind their breaking and entering using a deepfake. We will address potential motives further in this article.

Let’s take a closer look at deepfakes in action, before considering how businesses can implement more stringent deepfake safeguards.

Examples of deepfake attacks

The impact of deepfakes is already enormous, costing U.S. consumers and businesses an estimated $12.3 billion in 2023. This covers dozens of different AI-generated scams, from fake investment opportunities to deepfake videos of real customers being used to gain access to bank accounts.

Deloitte’s Center for Financial Services predicts “that gen AI could enable fraud losses to reach US$40 billion in the United States by 2027, from $12.3 billion in 2023, a compound annual growth rate of 32%.”
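To put those figures in context, here is a minimal sketch of the compound-growth arithmetic behind a projection like this. It assumes four compounding years between 2023 and 2027; the function names are ours for illustration and are not taken from Deloitte's report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in billions of US dollars.
# Assumption: 2023 -> 2027 is treated as 4 compounding periods.
implied_rate = cagr(12.3, 40.0, years=4)
at_quoted_rate = project(12.3, 0.32, years=4)

print(f"Implied annual growth rate: {implied_rate:.1%}")
print(f"$12.3bn compounded at 32% for 4 years: ${at_quoted_rate:.1f}bn")
```

Under that four-year assumption, the implied rate comes out at roughly 34%, and compounding at the quoted 32% gives about $37 billion. The small gap from the headline numbers may simply reflect rounding or a different baseline in the original report. Either way, losses would roughly triple in four years.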

Email deepfake fraud, using similar principles to phishing scams, is already responsible for $2.7 billion in losses. In 2022, the FBI’s Internet Crime Complaint Center recorded “21,832 instances of business email fraud.”

Deloitte projects that this will only get worse in the next couple of years: “Generative AI email fraud losses could total about US$11.5 billion by 2027 in an “aggressive” adoption scenario.”

A recent example of a deepfake attack is a Chinese-created piece of biometric deepfake malware known as “GoldPickaxe.”

GoldPickaxe works on Apple iOS and Android-powered devices. It presents itself as a government service app, encouraging people to scan their faces.

Using those facial scans, the malware, with help from cybercriminals and AI bots, identifies victims' bank accounts and attempts to gain access.

Unfortunately, even the most advanced biometric security checks at Southeast Asian banks can be fooled. One early victim of this scam in Vietnam lost $40,000, according to reports on this deepfake malware attack.

In December 2023, scammers used deepfake videos of Australian public figures to solicit money through a fake investment offer.

The videos showed Australia's Treasurer Jim Chalmers, prominent news presenter Peter Overton, and former Reserve Bank Governor Philip Lowe.

Considering the high-profile nature of the people in the videos, and the claims being made (“The Australian Securities Exchange along with the Reserve Bank have initiated groundbreaking measures to enhance the well-being of Australians!”), it’s not surprising that some people were scammed by them.

Even a poor-quality deepfake that would look obviously fake to a skeptical viewer doesn't have to fool everyone. All it takes is a handful of people handing over money for the criminals to profit from their efforts.

We could go on and on.

There are already too many examples of successful deepfake attacks, scams, and cases of fraud to count. It’s safe to assume that deepfakes trick far more people than ever report an incident.

Let's look more closely at how you can defend against deepfakes to protect your employees, customers, money, and sensitive data.

How to implement deepfake prevention

There are numerous ways to implement deepfake prevention. Deepfake technology is getting better, but so are deepfake detectors. Businesses already use these tools to stop fraud and ensure regulatory compliance.

Using Checkin.com for deepfake prevention is one way to distinguish a real person from an AI-generated profile, ID, or even video.

Deepfake prevention means making sure that your customers are actually who they claim to be. One way to prevent fraudulent behavior at the account creation or login stages is to use multi-factor authentication.

The following are Checkin.com solutions that can be used for deepfake prevention:

- Deepfake detection: Checkin.com provides advanced AI-based solutions for detecting synthetic images (deepfakes, face swaps, etc.) during every Liveness check. For every face checked, the system instantly returns a score for the likelihood that it was generated, and then blocks suspected synthetic verification attempts in real time.

- Liveness: Real-time detection that’s a robust tool in the fight against deepfakes. It instantly assesses how the input is presented to the camera, flagging a picture, recorded video, injection, or screen capture. To ensure someone is who they claim to be, the input should be a real-time “live” video.

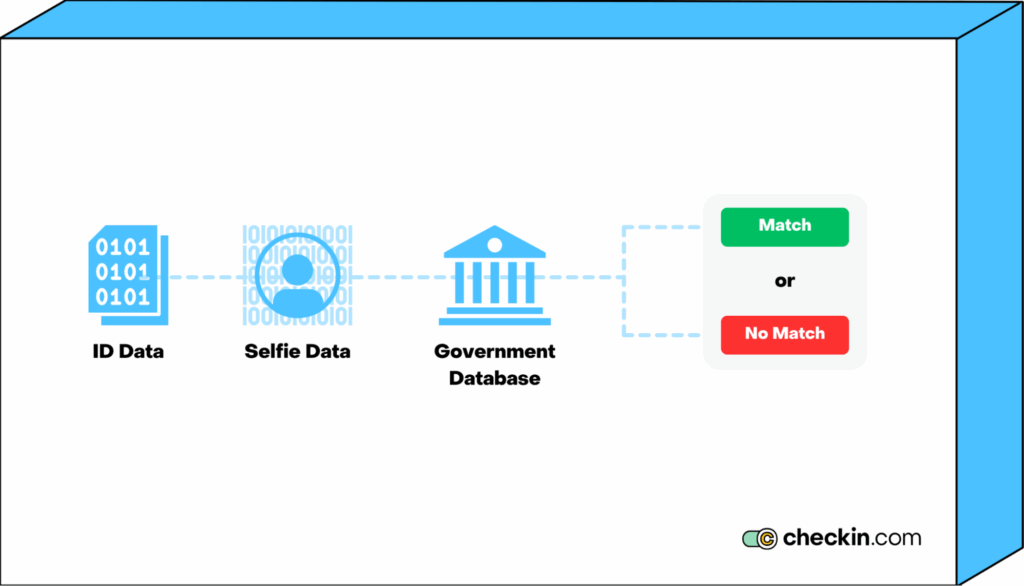

- Face Recognition: This detects whether there is a human face in front of the camera, including its size and position, and then performs 1-1 or 1-N matching depending on what’s required.
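To make the idea concrete, here is a minimal sketch of how the three signals described in this list might be combined into a single approve/reject decision. It is not Checkin.com's API: the class, field names, score ranges, and thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class VerificationSignals:
    """Hypothetical per-attempt signals; names and ranges are assumptions."""
    synthetic_score: float   # 0.0 = likely genuine, 1.0 = likely AI-generated
    presentation: str        # "live", "picture", "video", "injection", "screen"
    face_match_score: float  # 0.0..1.0 similarity to the ID photo (1-1 match)


# Illustrative thresholds only; real values would be tuned on labelled data.
MAX_SYNTHETIC_SCORE = 0.30
MIN_FACE_MATCH_SCORE = 0.80


def decide(signals: VerificationSignals) -> Tuple[bool, str]:
    """Return (approved, reason), rejecting on the first failed check."""
    if signals.presentation != "live":
        return False, f"presentation attack suspected: {signals.presentation}"
    if signals.synthetic_score > MAX_SYNTHETIC_SCORE:
        return False, "face is likely synthetic"
    if signals.face_match_score < MIN_FACE_MATCH_SCORE:
        return False, "face does not match the ID document"
    return True, "all checks passed"


print(decide(VerificationSignals(0.05, "live", 0.93)))
print(decide(VerificationSignals(0.05, "injection", 0.93)))
print(decide(VerificationSignals(0.72, "live", 0.93)))
```

The checks are ordered so that an attempt fails as soon as any one signal looks wrong. In practice, a borderline score might route the attempt to manual review rather than an outright rejection.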

Checkin.com offers many other solutions, such as One Time SMS and many local strong online authentication methods (such as BankID in Sweden), to enhance the security of onboarding or sign-in. All of these contribute to fraud prevention, including stopping deepfakes from accessing your customer accounts.
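As a rough illustration of what a one-time code adds as a second factor, here is a minimal sketch using only the Python standard library. The function names and the five-minute validity window are assumptions for the example, and sending the SMS itself is out of scope.

```python
import hmac
import secrets
import time
from typing import Optional, Tuple

CODE_TTL_SECONDS = 300  # assumed 5-minute validity window


def issue_code(now: Optional[float] = None) -> Tuple[str, float]:
    """Create a random 6-digit code and the timestamp at which it expires."""
    issued_at = time.time() if now is None else now
    code = f"{secrets.randbelow(1_000_000):06d}"  # cryptographically random
    return code, issued_at + CODE_TTL_SECONDS


def verify_code(submitted: str, expected: str, expires_at: float,
                now: Optional[float] = None) -> bool:
    """Accept the code only if it has not expired and matches exactly."""
    current = time.time() if now is None else now
    if current > expires_at:
        return False
    # Constant-time comparison avoids leaking how many digits were correct.
    return hmac.compare_digest(submitted.encode(), expected.encode())


code, expires_at = issue_code()
print(verify_code(code, code, expires_at))                    # fresh code
print(verify_code(code, code, expires_at, now=expires_at + 1))  # expired
```

A production system would also make each code single-use and limit the number of attempts. The point here is simply that a convincing deepfake of a face does not help an attacker who cannot also read the code sent to the real customer's phone.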

Deepfake prevention for the finance/crypto sector

In the financial services and crypto finance sectors, the main risk factor is that cybercriminals will use videos of real customers, as we’ve already seen in Southeast Asia with the GoldPickaxe malware, to trick facial recognition software and take money from people’s accounts.

There are three ways to combat this:

- Educate your customers, raising awareness about the dangers of deepfakes and how to avoid getting scammed.

- Educate your staff about the dangers of deepfakes. Help prevent employees from getting scammed, especially those with access to internal accounts and funds.

- Implement more effective deepfake prevention software, such as Checkin.com’s Liveness and Face Recognition products.

Deepfake prevention for the travel sector

In the travel sector, deepfakes and AI can be used to create fake travel and property listings.

Scammers could use AI tools to write descriptions, create images, and even produce deepfake videos of a perfect-sounding property. All it would take is an Airbnb listing and they could be raking in thousands from unsuspecting consumers.

When the scam is detected (as it eventually would be, when real people turn up to discover that their booking was for a non-existent property), the scammers would just start again with another fake listing.

Cybercriminals could also use deepfake videos and IDs to create fake accounts and profiles, book holidays and trips, and then use refund processes to launder money.

There are numerous ways that cybercriminals can profit from AI and deepfakes in the travel sector.

Brands in the travel sector would benefit from strengthening their internal and customer-facing deepfake detection technology, while also educating customers and staff about the dangers of deepfakes.

Deepfake prevention for the iGaming sector

In the iGaming sector, deepfakes are being used to create fake user accounts. Once accounts are created, AI bots are deployed to commit fraud such as bonus abuse and money laundering through online games, along with other malicious acts that cost the iGaming industry billions.

iGaming brands must have robust deepfake detection at the account activation, onboarding, KYC, and AML stages. The more AI-generated accounts you can prevent from being opened, the lower the risk that fraud can be committed by cybercriminals.
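One way to picture this at the account-opening stage is a 1-N face check: compare the new applicant's face against faces already enrolled, and flag close matches as possible duplicate accounts. The sketch below is illustrative only. It assumes each face has already been converted to a numeric embedding by some face recognition model, and the function names, toy vectors, and 0.9 threshold are all hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_duplicates(candidate: Sequence[float],
                    enrolled: Dict[str, Sequence[float]],
                    threshold: float = 0.9) -> List[str]:
    """Return IDs of enrolled accounts whose face closely matches the candidate."""
    return [
        account_id
        for account_id, embedding in enrolled.items()
        if cosine_similarity(candidate, embedding) >= threshold
    ]


# Toy 3-dimensional embeddings; real ones have hundreds of dimensions.
enrolled_faces = {
    "acct-1": [0.90, 0.10, 0.00],
    "acct-2": [0.00, 1.00, 0.00],
}
new_applicant = [0.88, 0.12, 0.01]

print(find_duplicates(new_applicant, enrolled_faces))
```

Here the new applicant's face is almost identical to the one behind `acct-1`, so that account is flagged. A match like this does not prove fraud on its own, but it is a useful trigger for extra checks before a bonus is paid out.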

Key Takeaways: Deepfake prevention

Deepfake prevention isn’t an easy task. It requires a combination of education, instinct, and technological solutions to fight a technology-created problem.

- Deepfake scams have already cost U.S. businesses and consumers over $12 billion. They include videos that go viral on social media (usually pushed further by AI bots), email scams, and apps that capture videos of real customers to create deepfakes.

- Deepfakes are a real and present security danger to countries and businesses worldwide.

- Deloitte expects that the cost of deepfake fraud and scams to U.S. businesses and consumers could reach $40 billion by 2027.

- Celebrities are the most popular people to be turned into deepfakes, and despite the obvious low quality of many of these deepfake videos, they still scam enough people to make them worth producing.

- With so many people posting videos of themselves on social media, it’s easier than ever to create a deepfake video of almost anyone.

- The same is true of high-profile business people. If your company or CEO is high-profile, it is more likely that you could be targeted by a deepfake scam, much like the unfortunate finance officer in Hong Kong who sent $25 million to scammers because they thought they were talking to the company CEO on a video call.

- With the right combination of education, instincts, and deepfake prevention tools, you can combat this threat against your company, employees, and customers.

Take your deepfake prevention to the next level with Checkin.com. Secure identity verification from any device in seconds.

Find out more about our Liveness and Face Recognition products, and how we can seamlessly integrate deepfake prevention into your KYC, AML, and customer onboarding systems.