Deepfake detection techniques and technology are fast becoming this year’s must-have for cybersecurity teams.

Deepfakes are everywhere, and they’re already costing U.S. consumers and businesses over $12.3 billion.

Of that total, deepfake phishing scams alone already account for $2.7 billion in fraud losses. In 2022, the FBI recorded 21,832 business email compromise (BEC) complaints.

The deepfake detection challenge will only get worse over the next couple of years, with generative artificial intelligence (AI) email fraud losses projected to total around $11.5 billion by 2027.

Deepfake video, email, and audio messages are the most dangerous products of the rising popularity of generative AI tools and models. Generative adversarial networks (GANs) are among the most effective AI models for creating fake content such as video and audio deepfakes.

Businesses and individuals need to fight back. Otherwise, the problem will keep growing, and more and more of the world’s GDP will be lost to deepfake-wielding cybercriminals and hostile states.

Tessa Bosschem, a Cyber and Information Security Specialist at Barclays Private Bank, says: “The sophistication of deepfakes is growing, and with it, the threat to individuals everywhere. These fake videos and audio clips can manipulate reality, but many people are still unaware of the danger.”

The real danger, as Tessa says, isn’t just in the present: “As AI technology only gets better, we can expect to see even more realistic and convincing deepfakes in the future.” As deepfake generation techniques get smarter, deepfake detection algorithms and techniques need to improve constantly to counteract this threat.

In this article, we look at how advanced current deepfake detection techniques are and how they will need to change to fight more advanced deepfakes.

Why deepfake detection techniques are mission-critical for cybersecurity

Generative AI, the form of AI we have today, has only been around for a few years. That is true of OpenAI, DeepSeek, Google’s Gemini, Anthropic’s Claude, and many others.

And yet, $1 trillion has already been invested in AI models, software, and hardware over the last few years, and investment is likely to continue at pace over the next few years.

The market leader, Microsoft-backed OpenAI, is worth $150 billion, and PwC predicts that AI could “contribute up to $15.7 trillion to the global economy in 2030, more than the current output of China and India combined.”

Every innovation and invention has created opportunities for criminals, gangs, scammers, and hostile countries. AI is no exception to that rule.

Although current AI models have only been around for a few short years, they’ve already cost U.S. consumers and businesses over $12.3 billion in scams and cyberattacks.

Deepfakes are currently by far the most dangerous of these risks. If this is the damage deepfakes and AI have done so far, imagine where we will be five years from now.

How much damage (financial, emotional, and legal) could deepfakes and AI cause if we don’t beat them with robust deepfake detection algorithms and techniques?

There are several reasons this is fast becoming mission-critical:

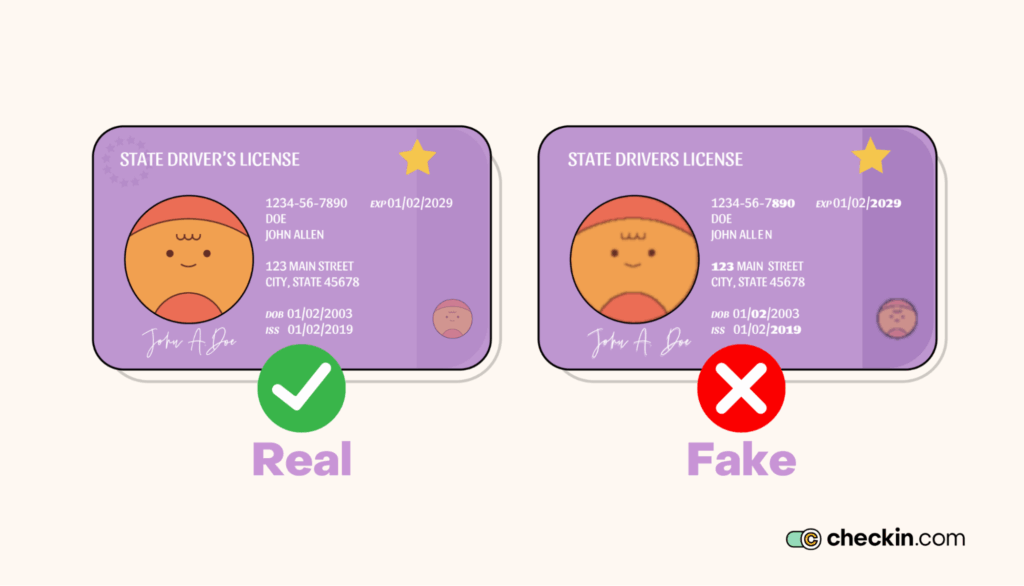

- If deepfakes can beat your Know Your Customer (KYC), Anti-Money Laundering (AML), and other compliance-based onboarding processes, then anyone can create an account with your company.

- This means criminals can launder money or, worse, misuse customer accounts.

- Once a criminal group has access to your business’s customer-facing systems, how long will it take before they reach internal systems and important data? That’s the danger: your organization could face risks similar to those of the Hong Kong company that lost $25 million to a deepfake video call.

- Cyberattacks are getting more expensive: the average data breach cost organizations $4.88 million in 2024.

- Your business could lose money through scams, data theft, or any of the other ways criminals steal funds once they have illegal access.

- Your accounts could be used for money laundering, thereby contributing to criminal organizations, terrorism, and even wars.

- Any regulatory and compliance breaches could cost you more money in fines and lawsuits once they become public.

- Customers could also lose confidence in you, and you could lose money if sensitive data and information were stolen as a result of a deepfake-based cyberattack.

Deepfakes are the most dangerous new cyberattack vector to threaten organizations of every shape and size in recent years.

Training people to spot a deepfake is an important step towards counteracting these threats, as is using deep learning models in deepfake detection technology.

How convincing are deepfakes?

One example already circulating is a Chinese-linked malware strain known as “GoldPickaxe,” which harvests the data criminals need to create deepfakes of unsuspecting users on both Apple iOS and Android devices.

On the surface, GoldPickaxe looks and behaves like a government service app, asking people to scan their faces.

Using those facial scans, criminals can use AI to generate videos and images that deceive the facial recognition checks used in two-factor authentication (2FA). In other words, a real face scan captured by a fake app can be used to pass a real customer’s login checks, giving the attackers access to that person’s bank accounts, passwords, and money.

GoldPickaxe is hurting consumers and their banks in Southeast Asia. One early victim of the scam in Vietnam lost $40,000.

Another example of how effective deepfakes are comes from Australia, where scammers used deepfake videos of Treasurer Jim Chalmers, news presenter Peter Overton, and former Reserve Bank Governor Philip Lowe to scam money out of thousands of people using a fake investment offer.

Techniques and tools for spotting deepfakes

Deepfakes are convincing enough to fool both people watching videos on social networks and the 2FA checks designed to prevent criminals from gaining access to consumers’ accounts.

However, one of the reasons they’re convincing right now is that not enough people know how to spot a deepfake. Raising awareness and training employees are integral to any strategy for combating the deepfake threat.

Here are 10 things to look out for if you’re looking at a video and aren’t sure, “Is this real or a deepfake?”:

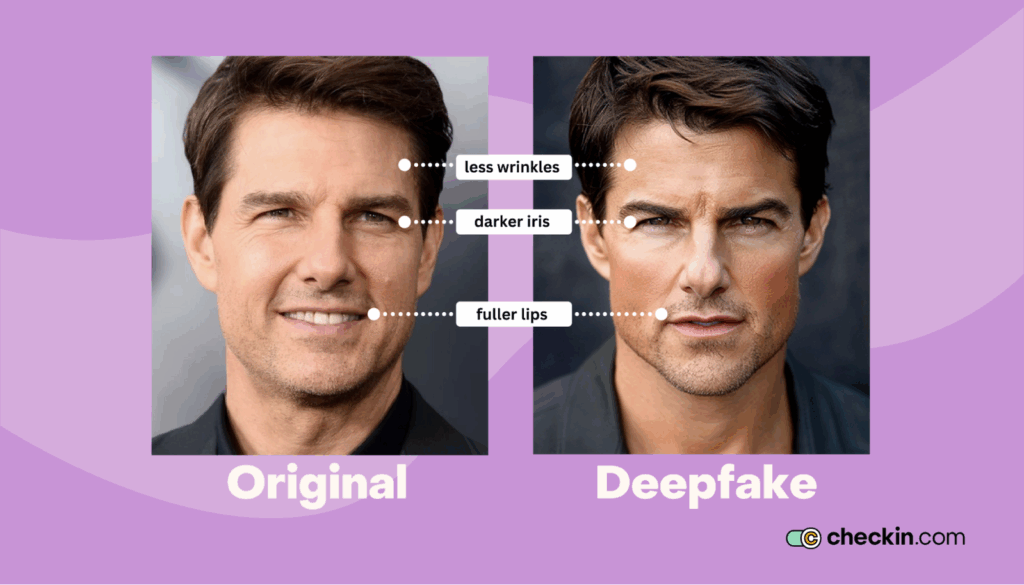

- Out-of-sync facial movements. Our facial movements are natural and fluid. A deepfake will have facial movements that don’t seem natural because parts of the video will be AI-generated or edited using AI from multiple videos.

- Unnatural blinking and lip movements. For the same reason, eye and lip movements that look unnatural or out of sync can be a sign of AI manipulation.

- Irregular skin texture. Generative AI still struggles to reproduce human skin tones and textures convincingly, so skin that looks too smooth or inconsistent is another indicator.

- Lighting and shadows don’t match. When foreground and background lighting and shadows aren’t consistent, it could be another sign of a manipulated or AI-generated video.

- Audio and visuals don’t align. This is a big one and an easy one to identify once you’ve seen it a couple of times. When the sound and visuals are out of sync, it’s another red flag.

- Digital forensics. Going beyond the human eye, digital forensics can be used to distinguish a deepfake from a real video. However, it is mainly used to investigate something after the fact, so it isn’t always practical at the moment of a 2FA login attempt.

- Some facial recognition tools can identify a deepfake. This technology isn’t embedded in every facial recognition tool, so it depends on what you’re currently using for logins, KYC, AML, and onboarding.

- Digital footprint analysis. This uses AI/ML to find and track down where elements of fake videos or audio come from. Again, this is more of an investigative tool; however, it’s a sign of where technology is already going in the fight against deepfakes.

- Metadata and artifact analysis. Examining a file’s metadata and compression artifacts can also reveal inconsistencies. Once a company can investigate after the fact, the number of tools available to tell a real video from a deepfake increases dramatically.

- Audio inconsistencies. Use audio detection tools to check for inconsistencies in speech patterns, background noise, and lip sync.
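Some of these checks can be partly automated. For example, the blinking cue can be approximated with the eye aspect ratio (EAR), a widely used measure computed from six landmarks around each eye that drops sharply when the eye closes. The Python sketch below is a minimal illustration, not a production detector: it assumes per-frame eye landmarks are already available from a separate face-landmark model (not shown), and the closed-eye threshold and the "normal" blink-rate range are illustrative assumptions that would need tuning on real footage.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2/p3 sit on the upper lid and
    p6/p5 directly below them on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], closed_threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with a closed eye."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def blink_rate_is_suspicious(ear_series: Sequence[float], fps: float,
                             normal_range: Tuple[float, float] = (8.0, 40.0)
                             ) -> Tuple[bool, float]:
    """Flag clips whose blinks per minute fall outside an assumed normal range."""
    if not ear_series or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    low, high = normal_range
    return not (low <= rate <= high), rate
```

For a one-minute clip at 30 fps in which the eyes never close, `blink_rate_is_suspicious([0.3] * 1800, fps=30)` returns `(True, 0.0)`. Early deepfakes were noted for blinking too rarely, and newer generators have largely addressed this, so blink rate is best treated as one weak signal among many.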

Alongside training and awareness, organizations need ways to prevent deepfakes from succeeding during onboarding and login flows. Deepfake video detection methods are a crucial part of how we fight back against deepfakes.
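The audio/visual alignment cue from the list above can also be checked automatically. One simple approach, sketched below in Python under stated assumptions, is to cross-correlate a per-frame mouth-opening signal (for example, the distance between lip landmarks) with per-frame audio loudness and find the time offset at which the two line up best. Both input signals are assumed to be extracted elsewhere and resampled to the video frame rate; the function name and the `max_lag` search window are hypothetical choices for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def _standardize(xs: Sequence[float]) -> List[float]:
    """Rescale to zero mean and unit variance (flat signals become all zeros)."""
    m, s = mean(xs), pstdev(xs)
    return [(x - m) / s for x in xs] if s > 0 else [0.0] * len(xs)


def estimate_av_lag(mouth_opening: Sequence[float], audio_energy: Sequence[float],
                    max_lag: int = 10) -> Tuple[int, float]:
    """Return (lag_in_frames, correlation) for the best alignment of the signals.

    A positive lag means the audio trails the mouth movement by that many frames.
    """
    if len(mouth_opening) != len(audio_energy) or not mouth_opening:
        raise ValueError("signals must be non-empty and sampled at the same rate")
    v, a = _standardize(mouth_opening), _standardize(audio_energy)
    n = len(v)
    best_lag, best_corr = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Pair video frame t with audio frame t + lag wherever both exist.
        pairs = [(v[t], a[t + lag]) for t in range(n) if 0 <= t + lag < n]
        if not pairs:
            continue
        corr = sum(x * y for x, y in pairs) / len(pairs)
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr
```

In a genuine recording, the best lag should sit near zero with a strong correlation; a consistent offset of more than a few frames, or a weak peak, would be a reason to route the clip for closer inspection. What counts as "a few frames" or "weak" is an assumption to calibrate on real data.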

Deepfake detection methods are getting smarter and better. They use deep learning algorithms to quickly spot fake images and videos created using generative models.
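Many deep learning detectors score individual video frames (or face crops) and then combine those scores into one verdict for the whole clip. How the scores are combined matters: a face swap may only be obvious in a handful of frames, so a plain average can dilute the evidence. The Python sketch below shows one common option, averaging only the highest-scoring fraction of frames. The per-frame scores are assumed to come from some frame-level classifier, and the 20% fraction is an illustrative assumption.

```python
from statistics import mean
from typing import Sequence


def video_fake_score(frame_scores: Sequence[float], top_fraction: float = 0.2) -> float:
    """Combine per-frame 'fake' probabilities into one clip-level score.

    Averages the highest-scoring `top_fraction` of frames (at least one frame),
    so a short burst of manipulated frames is not drowned out by clean ones.
    """
    if not frame_scores:
        raise ValueError("need at least one frame score")
    if not 0.0 < top_fraction <= 1.0:
        raise ValueError("top_fraction must be in (0, 1]")
    k = max(1, int(len(frame_scores) * top_fraction))
    return mean(sorted(frame_scores, reverse=True)[:k])
```

For ten frames where only two look manipulated, `video_fake_score([0.1] * 8 + [0.9, 0.9])` gives 0.9, whereas a plain mean would give about 0.26. The trade-off is a higher chance of flagging genuine clips that contain a few noisy frames, which is why any threshold applied to this score needs calibrating.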

How to implement deepfake detection

When customer onboarding (such as KYC and AML verification) is automated, training, awareness, and investigation tools are only useful after a breach has happened or a crime has been committed.

If there’s no “human-in-the-loop” during onboarding, then an AI-powered layer of deepfake detection tools needs to be deployed to prevent deepfake video or images from gaining access to consumer accounts and internal systems.

Your organization needs automated deepfake detection tools that are integrated into onboarding, sign-up, or login flows.

Some organizations try to bolt these tools on separately, which is harder, more costly, and more time-consuming. A separate deepfake detection step is also off-putting to genuine human customers who simply want to log in or sign up for your services.
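To make the idea of an integrated layer more concrete, here is a minimal Python sketch of how a deepfake score might feed into an automated onboarding decision alongside liveness and document checks. Everything in it is hypothetical: the `SelfieCheck` fields, the score scale, and both thresholds are assumptions, not any vendor's actual API. The design point is that borderline cases are routed to a human reviewer instead of being silently approved or rejected, which limits friction for genuine customers.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    MANUAL_REVIEW = "manual_review"
    REJECT = "reject"


@dataclass
class SelfieCheck:
    """Hypothetical results for one onboarding selfie or video."""
    deepfake_score: float        # 0.0 = looks genuine, 1.0 = almost certainly synthetic
    liveness_passed: bool        # result of a separate liveness check
    face_matches_document: bool  # selfie face matches the ID document photo


def onboarding_decision(check: SelfieCheck, reject_at: float = 0.8,
                        review_at: float = 0.4) -> Decision:
    """Map check results to a decision using illustrative thresholds."""
    if not 0.0 <= check.deepfake_score <= 1.0:
        raise ValueError("deepfake_score must be between 0 and 1")
    # Strong evidence of manipulation or a failed liveness check: stop here.
    if check.deepfake_score >= reject_at or not check.liveness_passed:
        return Decision.REJECT
    # Uncertain score or a face/document mismatch: bring a human into the loop.
    if check.deepfake_score >= review_at or not check.face_matches_document:
        return Decision.MANUAL_REVIEW
    return Decision.APPROVE
```

For instance, `onboarding_decision(SelfieCheck(0.5, True, True))` returns `Decision.MANUAL_REVIEW`, while a clean result such as `SelfieCheck(0.1, True, True)` is approved without any extra step for the customer.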

Integrated deepfake detection needs to be:

- Able to detect a deepfake within seconds using cutting-edge deepfake detection technology. This includes being able to spot identity swap (also known as face swap) and expression swap deepfakes.

- Built using an AI model trained on as many deepfake images and videos as possible (1 million+, ideally), with a dataset that contains a wide range of racial, age, and gender diversity.

- Seamlessly integrated with your existing KYC, AML, 2FA, liveness detection, and other onboarding and sign-up processes.

- Highly accurate. At the end of the day, an AI-powered deepfake detector is only as good as its success rate, and you need one that’s as accurate as it is fast.
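A single "accuracy" or "success rate" figure can hide an important trade-off: a detector can catch more deepfakes by flagging more people, at the cost of turning away genuine customers. When comparing tools, it helps to look at both error types separately. The Python sketch below computes them from a labelled test set; the function and field names are illustrative, and biometric testing standards such as ISO/IEC 30107-3 define closely related rates (APCER and BPCER) under their own names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DetectorMetrics:
    recall: float             # share of deepfakes that were caught
    precision: float          # share of flagged items that really were deepfakes
    false_accept_rate: float  # share of deepfakes that slipped through
    false_reject_rate: float  # share of genuine customers wrongly flagged


def _ratio(num: int, den: int) -> float:
    return num / den if den else 0.0


def evaluate(labels: Sequence[bool], flagged: Sequence[bool]) -> DetectorMetrics:
    """Compare ground truth (True = deepfake) with detector output (True = flagged)."""
    if len(labels) != len(flagged) or not labels:
        raise ValueError("need equal-length, non-empty label and prediction lists")
    pairs = list(zip(labels, flagged))
    tp = sum(1 for y, p in pairs if y and p)
    fn = sum(1 for y, p in pairs if y and not p)
    fp = sum(1 for y, p in pairs if not y and p)
    tn = sum(1 for y, p in pairs if not y and not p)
    return DetectorMetrics(
        recall=_ratio(tp, tp + fn),
        precision=_ratio(tp, tp + fp),
        false_accept_rate=_ratio(fn, tp + fn),
        false_reject_rate=_ratio(fp, fp + tn),
    )
```

For example, with four deepfakes and six genuine clips, a detector that catches three of the deepfakes and wrongly flags one genuine clip scores 0.75 recall, 0.75 precision, a 25% false accept rate, and roughly a 17% false reject rate.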

Seeing the exponentially rising threat of deepfakes, we took action and partnered with technology providers that could integrate with our systems.

Checkin.com’s software now comes with deepfake detection built in as an integral component of our AI-powered security systems, so your organization can have peace of mind that it is already proactively protected from the dangers of deepfakes.

Deepfake detection for the travel sector

In the travel sector, deepfake scams could be used to help people who are on no-fly lists or banned from entering specific countries, such as criminals and terrorists, to travel. This could put passengers, crew, and commercial assets in danger, and you risk numerous security and compliance failures.

It’s crucial to have robust deepfake detection systems and training in place to safeguard your customers and staff and stay legally compliant.

Deepfake detection for the finance/crypto sector

In the finance and crypto sector, deepfakes can be used to scam senior executives out of money or gain access to customer accounts to either steal or launder funds.

A 2024 report by the FS-ISAC Artificial Intelligence Risk Working Group illustrated numerous deepfake threat vectors and dangers facing the financial services sector. This makes deepfake detection even more critical, and yet the report shows that “6 in 10 [financial sector] executives say their firms have no protocols regarding deepfake risks.”

Clearly, the issue of deepfakes needs action sooner rather than later in the financial sector.

Deepfake detection for the iGaming sector

In the iGaming sector, deepfakes are being used to manipulate accounts and scam money out of gaming companies through bonus abuse. Fraud, including deepfake fraud, is costing the sector $1 billion annually.

With deepfakes, criminals can create dozens, even hundreds, of accounts and use them to make piles of money from fraudulent bonus payouts. This is another sector where deepfake detection is needed sooner rather than later.
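One signal that can help against mass account creation is checking whether the face used to open a new account is suspiciously similar to faces on existing accounts. The Python sketch below assumes each account already has a face embedding (a numeric vector) produced by some face-recognition model, and flags pairs whose cosine similarity exceeds an illustrative threshold. It is a simplified, hypothetical example: it only catches reuse of the same or a very similar face, so fully synthetic, distinct faces would need other signals, and a real system would use an indexed nearest-neighbour search rather than comparing every pair.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def find_linked_accounts(embeddings: Dict[str, Sequence[float]],
                         threshold: float = 0.9) -> List[Tuple[str, str]]:
    """Return pairs of account IDs whose face embeddings are near-duplicates."""
    return [
        (id_a, id_b)
        for (id_a, emb_a), (id_b, emb_b) in combinations(embeddings.items(), 2)
        if cosine_similarity(emb_a, emb_b) >= threshold
    ]
```

With toy three-dimensional embeddings `{"acc1": [1.0, 0.0, 0.0], "acc2": [0.99, 0.05, 0.0], "acc3": [0.0, 1.0, 0.0]}`, only `("acc1", "acc2")` is returned, since those two vectors point in almost the same direction.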

Key Takeaways: Deepfake detection techniques

The threat from deepfakes is only going to get worse: as AI evolves, deepfakes will become more sophisticated.

We are in an AI arms race, with criminals, gangs, and hostile nations on one side and technology providers, businesses, and consumers on the other. Training and awareness are as important as deepfake detection technology that can accurately distinguish a real human from an AI-generated or manipulated image, video, or audio clip.

For the financial sector, travel, and iGaming industries, deepfake detection tools that are seamlessly integrated with onboarding, KYC, AML, and 2FA systems are essential to safeguard the organization, customers, staff, and stakeholders.

Protect yourself from deepfakes with Checkin.com’s deepfake detection technology.